seff

Slurm records statistics for every job, including how much memory and CPU was used. After the job completes, you can run seff with the job ID to get some useful information about your job, including the memory used and what percent of your allocated memory that amounts to. For example:

    $ seff-array 43283382

Please see our guide on how to set up and use ClusterShell (clush).

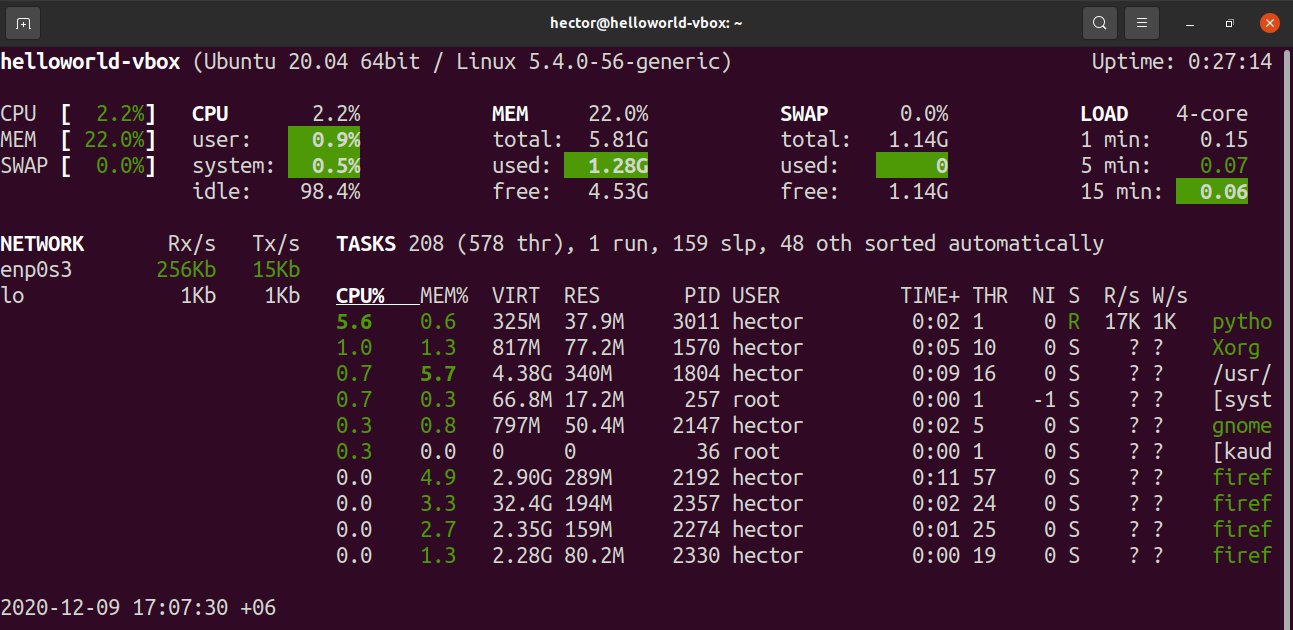

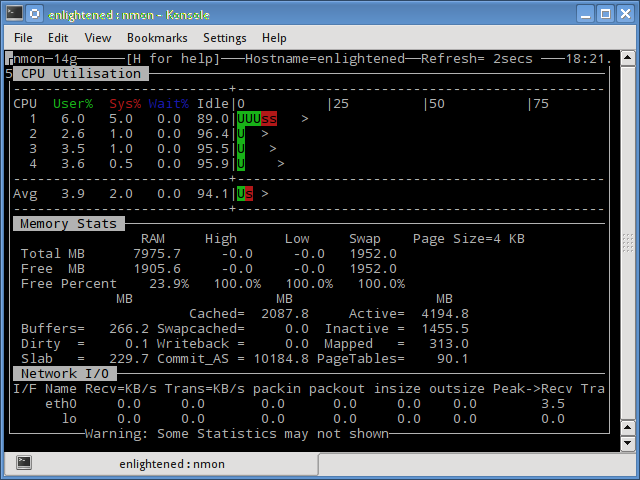

Running Jobs

The easiest way to check the instantaneous memory and CPU usage of a job is to ssh to a compute node your job is running on. To find the node you should ssh to, look up which nodes Slurm assigned to your job (for example, in the NODELIST column of `squeue` output). Once you are on the node, run either `ps` or `top`.

`ps` reports usage at the instant you run it. Here is some sample output:

    $ ps -u$USER -o %cpu,rss,args
    92.6 79446140 /gpfs/ysm/apps/hpc/Apps/Matlab/R2016b/bin/glnxa64/MATLAB -dmlworker -nodisplay -r distcomp_evaluate_filetask
    94.5 80758040 /gpfs/ysm/apps/hpc/Apps/Matlab/R2016b/bin/glnxa64/MATLAB -dmlworker -nodisplay -r distcomp_evaluate_filetask
    92.6 79676460 /gpfs/ysm/apps/hpc/Apps/Matlab/R2016b/bin/glnxa64/MATLAB -dmlworker -nodisplay -r distcomp_evaluate_filetask
    92.5 81243364 /gpfs/ysm/apps/hpc/Apps/Matlab/R2016b/bin/glnxa64/MATLAB -dmlworker -nodisplay -r distcomp_evaluate_filetask
    93.8 80799668 /gpfs/ysm/apps/hpc/Apps/Matlab/R2016b/bin/glnxa64/MATLAB -dmlworker -nodisplay -r distcomp_evaluate_filetask

`ps` reports memory used in kilobytes, so each of the 5 MATLAB processes is using ~77GiB of RAM. They are also using most of 5 cores, so future jobs like this should request 5 CPUs.

`top` runs interactively and shows you live usage statistics. You can press u, enter your netid, then enter to filter just your processes. For memory usage, the number you are interested in is RES. In the example screenshot, the YEPNEE.exe programs are each consuming ~600MB of memory and each fully utilizing one CPU. You can press ? for help and q to quit.

For multi-node jobs clush can be very useful.
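The arithmetic behind the `ps` reading can be sketched in a few lines of Python. This is only an illustration: the `SAMPLES` list and the `summarize` helper are hypothetical names, and the numbers are the %CPU and RSS columns copied from the sample output above. It assumes RSS is in kibibytes and that 100% CPU corresponds to one fully used core.

```python
import math

# (%CPU, RSS in kB) pairs copied from the sample ps output.
SAMPLES = [
    (92.6, 79446140),
    (94.5, 80758040),
    (92.6, 79676460),
    (92.5, 81243364),
    (93.8, 80799668),
]

KIB_PER_GIB = 1024 ** 2  # ps reports RSS in kibibytes


def summarize(samples):
    """Return (largest RSS in GiB, summed RSS in GiB, whole CPUs in use)."""
    peak_gib = max(rss for _, rss in samples) / KIB_PER_GIB
    total_gib = sum(rss for _, rss in samples) / KIB_PER_GIB
    # 100% CPU is one busy core, so round the summed percentage up.
    cpus = math.ceil(sum(cpu for cpu, _ in samples) / 100)
    return peak_gib, total_gib, cpus


peak_gib, total_gib, cpus = summarize(SAMPLES)
print(f"largest process: {peak_gib:.1f} GiB")
print(f"all processes:   {total_gib:.1f} GiB")
print(f"CPUs to request: {cpus}")
```

Summing RSS across processes can overstate real usage when processes share memory, so treat the total as an upper estimate when sizing a memory request.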

If your job is already running, you can check on its usage, but will have to wait until it has finished to find the maximum memory and CPU used.

If you run a program with /usr/bin/time -v in front of it, time reports the resources the program used. For example (output abridged):

    $ /usr/bin/time -v stress-ng --cpu 8 --timeout 10s
    stress-ng: info: successful run completed in 10.08s
    Command being timed: "stress-ng --cpu 8 --timeout 10s"
    Elapsed (wall clock) time (h:mm:ss or m:ss): 0:10.09
    Maximum resident set size (kbytes): 6328
    Minor (reclaiming a frame) page faults: 30799

To know how much RAM your job used (and what jobs like it will need in the future), look at the "Maximum resident set size". It is reported in kilobytes, so this run peaked at about 6 MiB.
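If you save the report from /usr/bin/time -v, the peak memory figure can be pulled out automatically. The following is a minimal sketch rather than a supported tool: `max_rss_mib` is a hypothetical helper, and `TIME_OUTPUT` holds lines copied from the example run above.

```python
import re

# Lines copied from the example /usr/bin/time -v report.
TIME_OUTPUT = """\
Elapsed (wall clock) time (h:mm:ss or m:ss): 0:10.09
Maximum resident set size (kbytes): 6328
Minor (reclaiming a frame) page faults: 30799
"""


def max_rss_mib(text):
    """Return the maximum resident set size in MiB, or None if it is absent."""
    match = re.search(r"Maximum resident set size \(kbytes\):\s*(\d+)", text)
    if match is None:
        return None
    return int(match.group(1)) / 1024  # kilobytes to MiB


print(f"peak memory: {max_rss_mib(TIME_OUTPUT):.1f} MiB")
```

GNU time writes its report to standard error, so redirect that stream to a file if you want to parse it after the job finishes.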
